# cayenne: a Python package for stochastic simulations

cayenne is a Python package for stochastic simulations. It offers a simple API to define models, perform stochastic simulations with them and visualize the results in a convenient manner.

## Install

Install with `pip`:

```bash
$ pip install cayenne
```

## Documentation

- General: https://cayenne.readthedocs.io

- Benchmark repository, comparing `cayenne` with other stochastic simulation packages: https://github.com/Heuro-labs/cayenne-benchmarks

## Usage

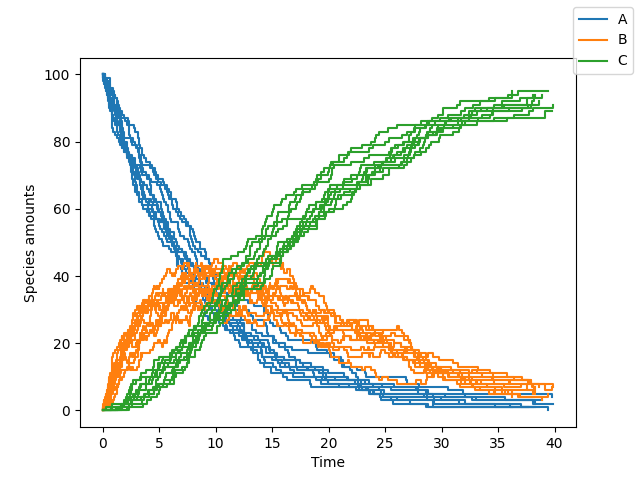

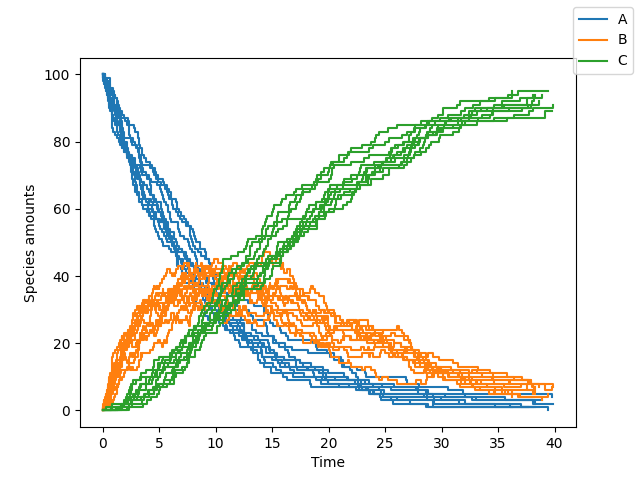

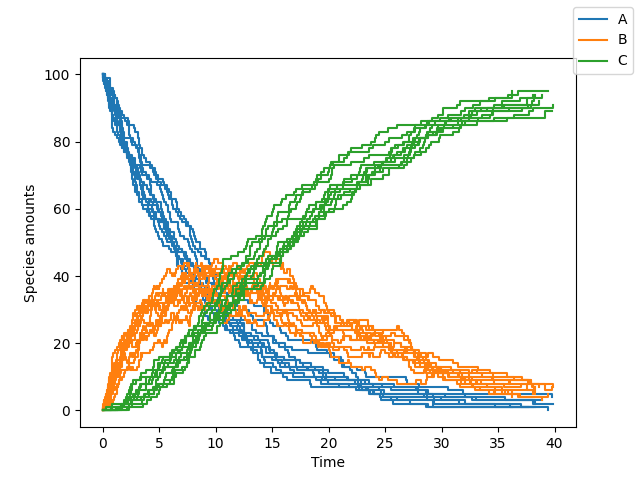

A short summary follows, but a more detailed tutorial can be found [here](https://cayenne.readthedocs.io/en/latest/tutorial.html). You can define a model as a Python string (or a text file, see [docs](https://cayenne.readthedocs.io)). The format of this string is loosely based on the excellent [antimony](https://tellurium.readthedocs.io/en/latest/antimony.html#introduction-basics) library, which is used behind the scenes by `cayenne`.

```python
from cayenne.simulation import Simulation

model_str = """
const compartment comp1;
comp1 = 1.0; # volume of compartment

r1: A => B; k1;
r2: B => C; k2;

k1 = 0.11;
k2 = 0.1;
chem_flag = false;

A = 100;
B = 0;
C = 0;
"""

sim = Simulation.load_model(model_str, "ModelString")
# Run the simulation
sim.simulate(max_t=40, max_iter=1000, n_rep=10)
sim.plot()
```
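For intuition, the model above can be compared with its deterministic limit. The equations below are a sketch that assumes mass-action kinetics, with `k1` and `k2` read as first-order rate constants (an assumption about how the rate terms are interpreted, not something stated here):

$$
\frac{dA}{dt} = -k_1 A, \qquad
\frac{dB}{dt} = k_1 A - k_2 B, \qquad
\frac{dC}{dt} = k_2 B
$$

With $A(0) = 100$ and $B(0) = C(0) = 0$, and $k_1 \neq k_2$, the solution is

$$
A(t) = 100\, e^{-k_1 t}, \qquad
B(t) = \frac{100\, k_1}{k_2 - k_1}\left(e^{-k_1 t} - e^{-k_2 t}\right), \qquad
C(t) = 100 - A(t) - B(t)
$$

Under that assumption every reaction is first order, so these curves are also the expected values of the stochastic counts, and the average over many repetitions should approach them.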

### Change simulation algorithm

You can change the algorithm used to perform the simulation by setting the `algorithm` parameter (one of `"direct"`, `"tau_leaping"` or `"tau_adaptive"`):

```python
sim.simulate(max_t=150, max_iter=1000, n_rep=10, algorithm="tau_leaping")
```

Our [benchmarks](https://github.com/Heuro-labs/cayenne-benchmarks) are summarized [below](#benchmarks) and show `direct` to be a good starting point. `tau_leaping` is faster but requires specifying and tuning the `tau` hyperparameter. The `tau_adaptive` algorithm is less accurate and still a work in progress.
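To make the idea behind a direct-method simulation concrete, here is a minimal pure-Python sketch of Gillespie's direct method for the `A => B => C` model. It is not `cayenne`'s implementation: the function `gillespie_direct` and its signature are hypothetical, and it assumes first-order mass-action propensities (`k1 * A` and `k2 * B`).

```python
import random


def gillespie_direct(k1, k2, a0, max_t, max_iter, seed=None):
    """Simulate A => B => C with the direct method; returns (times, states).

    Hypothetical helper for illustration only, not part of cayenne.
    """
    rng = random.Random(seed)
    t = 0.0
    a, b, c = a0, 0, 0
    times, states = [t], [(a, b, c)]
    for _ in range(max_iter):
        # Propensities, assuming first-order mass-action kinetics.
        p1 = k1 * a  # r1: A => B
        p2 = k2 * b  # r2: B => C
        total = p1 + p2
        if total == 0.0:
            break  # no reaction can fire any more
        # The waiting time to the next reaction is exponential with rate `total`.
        t += rng.expovariate(total)
        if t > max_t:
            break
        # Choose the reaction with probability proportional to its propensity.
        if rng.random() * total < p1:
            a, b = a - 1, b + 1
        else:
            b, c = b - 1, c + 1
        times.append(t)
        states.append((a, b, c))
    return times, states


times, states = gillespie_direct(k1=0.11, k2=0.1, a0=100, max_t=40, max_iter=1000, seed=0)
print(f"{len(times) - 1} reactions fired; final state (A, B, C) = {states[-1]}")
```

Each iteration fires exactly one reaction, which is why the direct method is exact but can be slow when many reactions occur. Tau-leaping methods instead fire several reactions per step of length `tau`.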

### Run simulations in parallel

You can run the simulations on multiple cores by specifying the `n_procs` parameter:

```python
sim.simulate(max_t=150, max_iter=1000, n_rep=10, n_procs=4)
```

### Accessing simulation results

You can access all the results, or only the results for a specific list of species:

```python
# Get all the results
results = sim.results

# Get results only for one or more species
results.get_species(["A", "C"])
```

You can also access the final states of all the simulation runs:

```python
# Get results at the simulation endpoints
final_times, final_states = results.final
```

Additionally, you can access the state at a particular time point of interest $t$. `cayenne` interpolates the value from nearby time points to give an accurate estimate.

```python
# Get results at timepoint "t"
t = 10.0
states = results.get_state(t) # returns a list of numpy arrays
```
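A stochastic trajectory only changes when a reaction fires, so it is constant between recorded events. One simple way to read off the state at an arbitrary time is therefore to take the last recorded state at or before that time. The sketch below illustrates this idea on a toy trajectory. The helper `state_at` is hypothetical, and this is not necessarily how `get_state` is implemented.

```python
from bisect import bisect_right


def state_at(times, states, t):
    """Return the last recorded state at or before time t (hypothetical helper)."""
    if not times or t < times[0]:
        raise ValueError("t lies before the first recorded time point")
    # bisect_right returns the insertion index after any entries equal to t,
    # so the element just before it is the most recent event at or before t.
    idx = bisect_right(times, t) - 1
    return states[idx]


# Toy trajectory: event times and the (A, B, C) counts after each event.
times = [0.0, 0.8, 2.5, 4.1]
states = [(3, 0, 0), (2, 1, 0), (1, 2, 0), (1, 1, 1)]

print(state_at(times, states, 3.0))  # (1, 2, 0)
```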

<h2 id="benchmarks"> Benchmarks </h2>

| | direct | tau_leaping | tau_adaptive |
| --- | --- | --- | --- |
| cayenne | :heavy_check_mark: Most accurate yet | :heavy_check_mark: Very fast but may need manual tuning | Less accurate than GillespieSSA's version |
| Tellurium | :exclamation: Inaccurate for 2nd order | N/A | N/A |
| GillespieSSA | Very slow | :exclamation: Inaccurate for initial zero counts | :exclamation: Inaccurate for initial zero counts |
| BioSimulator.jl | :exclamation: Inaccurate interpolation | :exclamation: Inaccurate for initial zero counts | :exclamation: Inaccurate for initial zero counts |

## License

Copyright (c) 2018-2020, Dileep Kishore, Srikiran Chandrasekaran. Released under: Apache Software License 2.0

## Credits

- [Cython](https://cython.org/)

- [antimony](https://tellurium.readthedocs.io/en/latest/antimony.html)

- [pytest](https://docs.pytest.org)

- [Cookiecutter](https://github.com/audreyr/cookiecutter)

- [audreyr/cookiecutter-pypackage](https://github.com/audreyr/cookiecutter-pypackage)

- [black](https://github.com/ambv/black)

- Logo made with [logomakr](https://logomakr.com/)

# MiCoNE - Microbial Co-occurrence Network Explorer

MiCoNE is a Python package for exploring how the choice of tools at each step of the 16S data processing workflow affects the inferred co-occurrence networks.

It is also a flexible and modular pipeline for 16S data analysis, offering parallelized, fast and reproducible runs for different combinations of tools at each step of the data processing workflow.

It incorporates various popular, publicly available tools as well as custom Python modules and scripts to facilitate inference of co-occurrence networks from 16S data.

- Free software: MIT license

- Documentation: https://micone.readthedocs.io/

The MiCoNE framework is introduced in:

Kishore, D., Birzu, G., Hu, Z., DeLisi, C., Korolev, K., & Segrè, D. (2023). Inferring microbial co-occurrence networks from amplicon data: A systematic evaluation. mSystems. doi:10.1128/msystems.00961-22.

Data related to the publication can be found on Zenodo: https://doi.org/10.5281/zenodo.7051556.

## Features

- Plug and play architecture: allows easy addition and removal of tools

- Flexible and portable: allows running the pipeline on a local machine, a compute cluster or the cloud with minimal configuration changes through the use of [nextflow](https://www.nextflow.io)

- Parallelization: automatic parallelization both within and across samples (needs to be enabled in the `nextflow.config` file)

- Ease of use: available as a minimal `Python` library (without the pipeline) or as a full `conda` package

## Installation

Installing the `conda` package:

```sh
mamba env create -n micone -f https://raw.githubusercontent.com/segrelab/MiCoNE/master/env.yml
```

> NOTE:
>
> 1. MiCoNE requires the `mamba` package manager, otherwise `micone init` will not work.
> 2. Direct installation via anaconda cloud will be available soon.

Installing the minimal `Python` library:

```sh
pip install micone
```

> NOTE:
> The `Python` library does not provide the functionality to execute pipelines.

## Workflow

The pipeline converts raw 16S sequence data into co-occurrence networks.

Each process in the pipeline supports alternative tools for performing the same task; users can select among them in the configuration file.

## Usage

The `MiCoNE` pipeline comes with an easy-to-use CLI. To get a list of subcommands you can type:

```sh
micone --help
```

Supported subcommands:

1. `install` - Initializes the package and environments (creates `conda` environments for various pipeline processes)

2. `init` - Initializes the nextflow templates for the micone workflow

3. `clean` - Cleans files from a pipeline run (cleans temporary data, log files and other extraneous files)

4. `validate-results` - Checks the results of the pipeline execution

### Installing the environments

In order to run the pipeline, various `conda` environments must first be installed on the system.

Use the following command to initialize all the environments:

```sh
micone install
```

Or to initialize a particular environment use:

```sh
micone install -e "micone-qiime2"
```

The supported environments are:

- micone-cozine

- micone-dada2

- micone-flashweave

- micone-harmonies

- micone-mldm

- micone-propr

- micone-qiime2

- micone-sparcc

- micone-spieceasi

- micone-spring
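To install only a few of these environments, the single-environment command can be repeated once per name. The sketch below builds those commands from a list. The names `SUPPORTED_ENVS` and `install_commands` are hypothetical, and the sketch assumes `-e` takes one environment name per invocation, since that is the only form shown above.

```python
import shlex

# Environment names copied from the list above.
SUPPORTED_ENVS = [
    "micone-cozine",
    "micone-dada2",
    "micone-flashweave",
    "micone-harmonies",
    "micone-mldm",
    "micone-propr",
    "micone-qiime2",
    "micone-sparcc",
    "micone-spieceasi",
    "micone-spring",
]


def install_commands(envs):
    """Build one `micone install -e <env>` argument list per requested environment."""
    unknown = sorted(set(envs) - set(SUPPORTED_ENVS))
    if unknown:
        raise ValueError(f"unsupported environments: {', '.join(unknown)}")
    return [["micone", "install", "-e", env] for env in envs]


for cmd in install_commands(["micone-dada2", "micone-sparcc"]):
    # Printed rather than executed; pass `cmd` to subprocess.run(cmd, check=True) to run it.
    print(shlex.join(cmd))
```

Validating the names first means a typo is caught before any installation starts.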

### Initializing the pipeline template

To initialize the full pipeline (from raw 16S sequencing reads to co-occurrence networks):

```sh
micone init -w <workflow> -o <path/to/folder>
```

The supported pipeline templates (work in progress) are:

- full

- ni

- op_ni

- ta_op_ni

To run the pipeline, update the relevant config files (see next section), activate the `micone` environment and run the `run.sh` script that was copied to the directory:

```sh
bash run.sh
```

This runs the pipeline locally using the config options specified.

To run the pipeline on an SGE-enabled cluster, add the relevant project/resource allocation flags to the `run.sh` script and run as:

```sh
qsub run.sh
```

## Configuration and the pipeline template

The pipeline template for the micone "workflow" (see previous section for list of supported options) is copied to the desired folder after running `micone init -w <workflow>`.

The template folder contains the following folders and files:

- nf_micone: Folder containing the `micone` default configs, data, functions, and modules

- templates: Folder containing the templates (scripts) that are executed during the pipeline run

- main.nf: The pipeline "workflow" defined in the `nextflow` DSL 2 specification

- nextflow.config: The configuration for the pipeline. This file needs to be modified in order to change any configuration options for the pipeline run

- metadata.json: Contains the basic metadata that describes the dataset that is to be processed. Should be updated accordingly before pipeline execution

- samplesheet.csv: The file that contains the locations of the input data necessary for the pipeline run. Should be updated accordingly before pipeline execution

- run.sh: The `bash` script that contains commands used to execute the `nextflow` pipeline
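Before editing the configuration, it can help to confirm that the template folder is complete. The sketch below checks a folder against the entries listed above. `EXPECTED_ENTRIES` and `missing_template_entries` are hypothetical names, not part of the `micone` package, and the demo runs on a throwaway temporary folder.

```python
import tempfile
from pathlib import Path

# Entries the template folder is expected to contain, per the list above.
EXPECTED_ENTRIES = {
    "nf_micone": "dir",
    "templates": "dir",
    "main.nf": "file",
    "nextflow.config": "file",
    "metadata.json": "file",
    "samplesheet.csv": "file",
    "run.sh": "file",
}


def missing_template_entries(folder):
    """Return the names of expected entries that are absent from `folder`."""
    root = Path(folder)
    missing = []
    for name, kind in EXPECTED_ENTRIES.items():
        path = root / name
        present = path.is_dir() if kind == "dir" else path.is_file()
        if not present:
            missing.append(name)
    return missing


# Demo on a temporary folder that holds only two of the expected entries.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "nf_micone").mkdir()
    (root / "main.nf").touch()
    demo_missing = missing_template_entries(root)

print(demo_missing)
```

An empty list means every expected entry is present. In practice the function would be pointed at the folder passed to `micone init -o`.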

The folder `nf_micone/configs` contains the default configs for all the `micone` pipeline workflows.

These options can also be viewed in tabular format in the [documentation](https://micone.readthedocs.io/en/latest/usage.html#configuring-the-pipeline).

For example, to change the tools used for OTU assignment to `dada2` and `deblur`, you can add the following to `nextflow.config`:

```groovy
// ... config initialization

params {
    // ... other config options
    denoise_cluster {
        otu_assignment {
            selection = ['dada2', 'deblur']
        }
    }
}
```

Example configuration files used for the analyses in the manuscript can be found [here](https://github.com/segrelab/MiCoNE-pipeline-paper/tree/master/scripts/runs).

## Visualization of results (coming soon)

The results of the pipeline execution can be visualized using the scripts in the [manuscript repo](https://github.com/segrelab/MiCoNE-pipeline-paper/tree/master/scripts).

## Known issues

1. If you have a version of `julia` that is preinstalled, make sure that it does not conflict with the version downloaded by the `micone-flashweave` environment

2. The data directory (`nf_micone/data`) needs to be manually downloaded using this [link](https://zenodo.org/record/7051556/files/data.zip?download=1).

## Credits

This package was created with [Cookiecutter](https://github.com/audreyr/cookiecutter) and the [audreyr/cookiecutter-pypackage](https://github.com/audreyr/cookiecutter-pypackage) project template.